As applications grow over time, most of our clients want to bring continuous integration (CI) and continuous delivery (CD) into their development workflow. CI/CD documents the process, reduces human error on repetitive work, and saves cost. We know many teams use tools such as Jenkins to automate their CI and CD workflows. In this post, we would like to introduce a different approach: CI and CD with AWS CodePipeline, CodeBuild, and CloudFormation.

CodePipeline integrates with popular tools such as AWS CodeBuild, GitHub, Jenkins, and TeamCity, which makes the development workflow native to the AWS Cloud. We were able to “host” our CI and CD flow completely serverless and available 24/7, without paying for idle build-server time. We also implemented everything as “infrastructure as code” in CloudFormation, which makes it much easier to scale build services up and down. In this post, we provide a few practical examples of using CodeBuild to run unit tests, package source code, and build the production stack, as well as continuously deploying to the production stack with CodePipeline and CloudFormation. As always, sample code accompanies this tutorial on CI and CD with AWS CodePipeline.

Traditional CI/CD Tools vs CI and CD with AWS CodePipeline

Jenkins has long been a dominant CI/CD tool. We have worked with several clients to automate their continuous operations infrastructure, from testing and building to deployment. Jenkins’s strength is its plugin system: you can find almost anything in the plugin marketplace. For enterprise customers, however, this can be cumbersome, because many of those plugins lack security compliance.

Later, managed CI/CD tools such as Solano Labs and TeamCity came to market. When customers moved their stacks to the AWS Cloud, they wanted better protection of their digital assets inside a VPC at a reasonable cost. Fortunately, AWS released CodePipeline and CodeBuild, which also integrate with the tools above to make migration seamless. You can build, package, or even test source code securely in an isolated container, then deploy the stack to a VPC. Because these are managed services, you save the engineering cost of maintaining your own build tool such as Jenkins or Bamboo. In the following sections, we break down the critical components of CI and CD with AWS CodePipeline.

CodeCommit vs GitHub vs Bitbucket

AWS CodePipeline has native integration with CodeCommit and third-party integration with GitHub; at the time of writing, it could not work with Bitbucket. Most of our customers were using Bitbucket, GitHub, or a private Git server.

In our initial experiments, CodePipeline had the best support for CodeCommit. Although AWS advertises GitHub integration, we had a hard time checking out source code from a GitHub organization account in CodePipeline. At the time, it supported authentication only with a GitHub username and personal access token, and the GitHub organization accounts our enterprise customers were on did not allow personal access tokens. You could customize the checkout with a Lambda function, but after some investigation we found it better to simply mirror the source repository to CodeCommit, which has less overhead than the other approaches and lets us easily switch the source location in the future.

Mirror Repo to CodeCommit

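Mirroring any Git repository is straightforward: add CodeCommit as an additional push URL on the existing origin remote. Keep in mind that once a remote has a push URL configured, git push sends commits only to its push URLs, so register the original repository as a push URL as well; otherwise pushes stop reaching it. The official reference documentation is here.

    git remote set-url --add --push origin your-original-repo-url
    git remote set-url --add --push origin your-repo-url/repo-name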

Once the code has been pushed to CodeCommit, the rest is natural to configure in the CloudFormation template. Here is a snippet of the Source stage in CodePipeline:

    Stages:
      - Name: Source
        Actions:
          - Name: APISource
            ActionTypeId:
              Category: Source
              Owner: AWS
              Version: 1
              Provider: CodeCommit
            Configuration:
              RepositoryName: frugalbot
              BranchName: master
            OutputArtifacts:
              - Name: JavaSource
            RunOrder: 1

CodeBuild – “Pay as you go” AWS Build Tool

CodeBuild plays an important role in CI and CD with AWS CodePipeline. Since its first release, it has expanded its footprint from building and packaging to testing tasks as well, and it can run most Linux command-line tasks. CodeBuild also inherits the best of AWS: a “pay as you go” cost structure, where you pay only for the build time you use. We no longer need to worry about idle Jenkins or build servers. In our example, we use CodeBuild for unit testing and packaging.

Unit Testing

Testing is a little tricky in our application architecture. We use a handful of mock objects in our tests, but some tests still require a database to run transactional tests. Our first idea was to spin up an RDS instance and terminate it at the end of the test run. Unfortunately, at the time of writing CodeBuild could not establish a connection to RDS. Instead, we run a local database for the tests: it is not difficult to grab a Postgres container, then set it up and tear it down before and after the tests. Going one step further, we built a customized database container on top of the Postgres image that provisions the user credentials and database name for unit testing.

CodeBuild-Testing-cloudformation-Snippet

    JavaUnitTestCodeBuildProject:
      Type: AWS::CodeBuild::Project
      Properties:
        Artifacts:
          Type: NO_ARTIFACTS
        Source:
          Location: https://git-codecommit.us-east-1.amazonaws.com/v1/repos/repo-name
          Type: "CODECOMMIT"
          BuildSpec: |
            version: 0.2
            phases:
              install:
                commands:
                  - apt-get update -y
                  - apt-get install -y awscli
              pre_build:
                commands:
                  - $(aws ecr get-login --region $AWS_DEFAULT_REGION)
                  # Quote echo text: an unquoted ***** is a shell glob that lists the working directory
                  - echo "** launch unit test db **"
                  - docker run -d -p 5432:5432 --name database xxxxxx.dkr.ecr.us-east-1.amazonaws.com/database:latest
                  - echo "** pull maven image **"
                  - docker pull maven:3.5.0-jdk-8
              build:
                commands:
                  - echo Build started on `date`
                  - docker run -i --name maven-test -v "$PWD":/usr/src/repo -w /usr/src/repo --link database:db.xxxx.test maven:3.5.0-jdk-8 bash -c 'mvn clean compile test'
              post_build:
                commands:
                  - echo Build completed on `date`
        Environment:
          ComputeType: "BUILD_GENERAL1_MEDIUM"
          Image: "aws/codebuild/docker:1.12.1"
          Type: "LINUX_CONTAINER"
        Name: !Sub ${AWS::StackName}-frugalbot-backend-unit-test
        Description: unit test frugalbot backend java source
        ServiceRole: !Ref CodeBuildServiceRole
        TimeoutInMinutes: 60
        Tags:
          - Key: Product
            Value: FrugalBot
          - Key: SourceType
            Value: Java
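One gap worth closing: docker run -d returns as soon as the database container starts, not when Postgres is ready, so the Maven tests can race the database. Here is a minimal readiness check in Python, assuming Python 3 is available in the build image and that the published port 5432 is reachable on localhost from the build container (neither is shown in the snippet above); wait_for_port is our own hypothetical helper.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True as soon as the port accepts a connection, False on timeout.
    An open port only proves that Postgres is listening; it may still be
    finishing initialization, so the very first query can fail briefly.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError (e.g. ConnectionRefusedError)
            # while nothing is listening on the port yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

It could run as a pre_build step right after the database container starts, failing the build if the port never opens. If the Postgres client tools are installed, pg_isready is a stricter check because it asks the server whether it is accepting connections.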

Package

Next, code packaging. There are plenty of build and package tools, such as Maven for Java and Grunt or Gulp for Node.js. Fortunately, they all run from a Linux shell, and anything that runs in a shell is straightforward to automate in CodeBuild. Here are the CodeBuild CloudFormation snippets for packaging with Maven and Grunt.

CodeBuild-maven-cloudformation-Snippet

    JavaPackCodeBuildProject:
      Type: AWS::CodeBuild::Project
      Properties:
        Artifacts:
          Location: !Ref ArtifactsS3Location
          Name: "java"
          Type: "S3"
        Source:
          Location: https://git-codecommit.us-east-1.amazonaws.com/v1/repos/repo-name
          Type: "CODECOMMIT"
          BuildSpec: |
            version: 0.2
            phases:
              install:
                commands:
                  - apt-get update -y
                  - apt-get install -y awscli
              pre_build:
                commands:
                  - echo maven version is `mvn -version`
              build:
                commands:
                  - echo Build started on `date`
                  - mvn compile war:war
              post_build:
                commands:
                  - echo Build completed on `date`
                  # Store the build version for the Docker container build process;
                  # Maven writes the WAR to target/, so match it there
                  - echo $(basename target/mvc-*.war) | grep -o "[0-9].[0-9].[0-9].[A-Z]*" | tr -d '\n' > build.txt
                  - aws s3 cp target/mvc-*.war s3://bucket-name/java/
            artifacts:
              files:
                - build.txt
              discard-paths: yes
        Environment:
          ComputeType: "BUILD_GENERAL1_SMALL"
          Image: "maven:3.5.0-jdk-8"
          Type: "LINUX_CONTAINER"
        Name: !Sub ${AWS::StackName}-frugalbot-backend-package
        Description: package frugalbot backend package api
        ServiceRole: !Ref CodeBuildServiceRole
        TimeoutInMinutes: 60
        Tags:
          - Key: Product
            Value: FrugalBot
          - Key: SourceType
            Value: Java
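To see exactly what ends up in build.txt, the grep step can be replayed in Python. This sketch applies the buildspec’s pattern and, for comparison, a stricter pattern of our own; the sample file names used with it are made up for illustration, since the post only shows the mvc-*.war glob.

```python
import re

# Pattern used in the buildspec: grep -o "[0-9].[0-9].[0-9].[A-Z]*".
# The unescaped dots match any character, not just a literal dot.
GREP_PATTERN = re.compile(r"[0-9].[0-9].[0-9].[A-Z]*")

# Hypothetical stricter alternative: numeric parts of any length plus an
# optional upper-case qualifier such as "-SNAPSHOT".
STRICT_PATTERN = re.compile(r"\d+\.\d+\.\d+(?:-[A-Z]+)?")


def extract_version(war_name, pattern=STRICT_PATTERN):
    """Return the first version string in a WAR file name (the first match grep -o prints)."""
    match = pattern.search(war_name)
    if match is None:
        raise ValueError(f"no version found in {war_name!r}")
    return match.group(0)
```

With the buildspec’s pattern, mvc-1.0.0-SNAPSHOT.war yields 1.0.0-SNAPSHOT, but a release name such as mvc-1.2.3.war yields 1.2.3. with a trailing dot, and mvc-1.10.0.war yields nothing because each version part must be a single digit. The stricter pattern returns 1.2.3 and 1.10.0 for those names; in the buildspec it would correspond to grep -oE "[0-9]+\.[0-9]+\.[0-9]+(-[A-Z]+)?".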

CodeBuild-grunt-cloudformation-Snippet

    JsPackCodeBuildProject:
      Type: AWS::CodeBuild::Project
      Properties:
        Artifacts:
          Location: !Ref ArtifactsS3Location
          Name: "js"
          Type: "S3"
        Source:
          Location: https://git-codecommit.us-east-1.amazonaws.com/v1/repos/repo-name
          Type: "CODECOMMIT"
          BuildSpec: |
            version: 0.2
            phases:
              install:
                commands:
                  - apt-get update -y
                  - pip install --upgrade awscli
                  - echo install ruby
                  - apt-get install -y ruby-full
                  - gem install sass
              pre_build:
                commands:
                  - echo install utils
                  - npm install grunt-cli -g
                  - npm install bower -g
              build:
                commands:
                  - echo Build started on `date`
                  # https://serverfault.com/questions/548537/cant-get-bower-working-bower-esudo-cannot-be-run-with-sudo
                  - bower install --allow-root
                  - npm install
                  - grunt clean prod
              post_build:
                commands:
                  - echo Build completed on `date`
                  # Store the build version for the Docker container build process
                  - echo $(basename build/*.tar.gz) | tr -d '\n' > build.txt
                  - aws s3 cp build/*.tar.gz s3://bucket-name/js/
            artifacts:
              files:
                - build.txt
              discard-paths: yes
        Environment:
          ComputeType: "BUILD_GENERAL1_SMALL"
          Image: "aws/codebuild/nodejs:4.4.7"
          Type: "LINUX_CONTAINER"
        Name: !Sub ${AWS::StackName}-frugalbot-js-package
        Description: frugalbot front-end builder
        ServiceRole: !Ref CodeBuildServiceRole
        TimeoutInMinutes: 10
        Tags:
          - Key: Product
            Value: FrugalBot
          - Key: SourceType
            Value: Javascript
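The front-end build.txt line has a quieter failure mode: if grunt leaves no tarball, or more than one, in build/, the echo $(basename ...) pipeline still exits 0 and writes a wrong name. Here is a Python sketch of a stricter replacement that insists on exactly one match; the function names are our own, and only the build/*.tar.gz glob comes from the snippet.

```python
import glob
import os


def single_artifact_name(pattern: str) -> str:
    """Return the basename of the one file matching `pattern`.

    The shell version, echo $(basename build/*.tar.gz), still exits 0 when
    the glob matches nothing or several files, so a bad name can reach
    build.txt. This sketch raises instead.
    """
    matches = sorted(glob.glob(pattern))
    if len(matches) != 1:
        raise RuntimeError(f"expected exactly one match for {pattern!r}, found {matches}")
    return os.path.basename(matches[0])


def write_build_txt(pattern: str, out_path: str = "build.txt") -> str:
    """Write the artifact name with no trailing newline, like tr -d '\\n'."""
    name = single_artifact_name(pattern)
    with open(out_path, "w") as handle:
        handle.write(name)
    return name
```

Calling write_build_txt("build/*.tar.gz") from the build directory would produce the same build.txt as the shell line when exactly one tarball exists, and fail the step otherwise.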

With CodeBuild, we no longer pay for build-server idle time, and we free up the engineering time that used to go into maintaining build servers. You may notice that each package job writes a build.txt file; we will talk about that in Part 2.

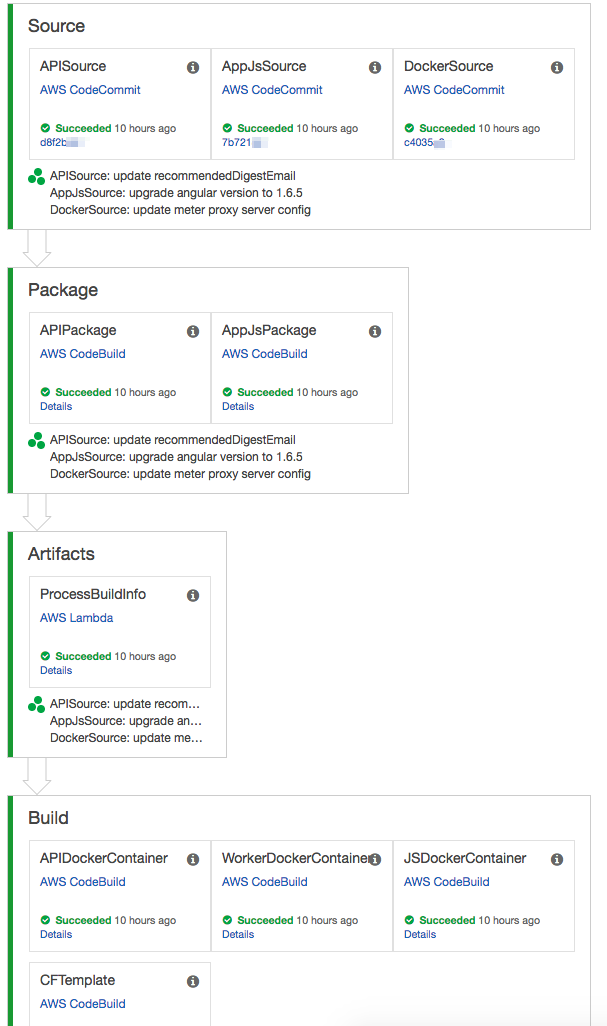

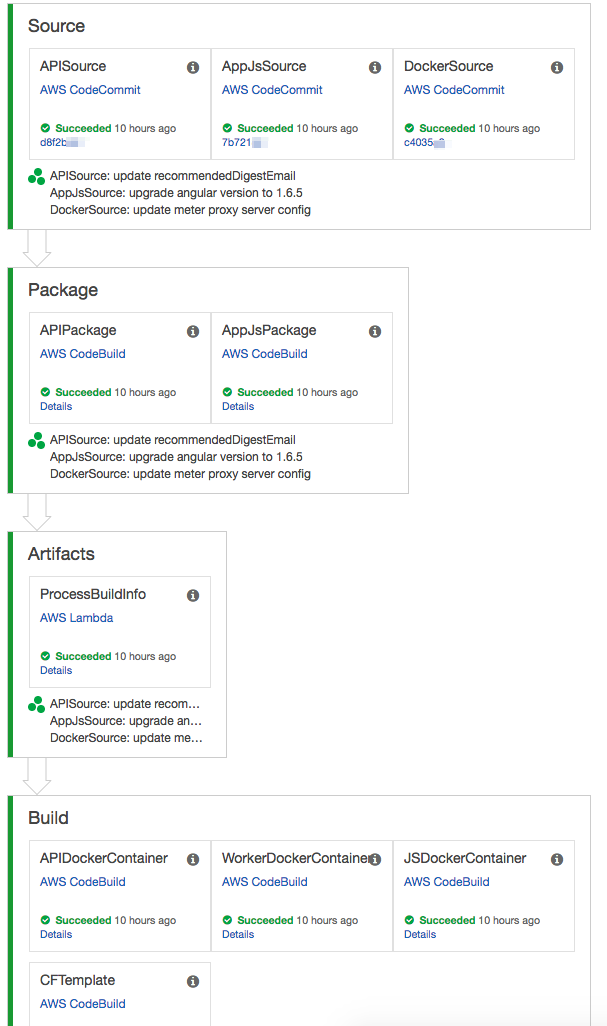

CodePipeline – Streamline Build Workflow on Cloud

Having a single automated build job is handy, but a typical CI/CD process has a series of jobs to complete. CodePipeline takes this to the next level: it offers a simple, straightforward mechanism to run build jobs in a predefined workflow, from source checkout to deployment.

You can add custom actions after a specific build action, such as seeking approval from a stakeholder before deploying code, or invoking a Lambda function to back up logs. Our sample is a simple workflow that is triggered by a code push and eventually deploys to a testing environment. We will cover the custom Lambda functions in Part 2.

    Pipeline:
      Type: AWS::CodePipeline::Pipeline
      Properties:
        RoleArn: !GetAtt CodePipelineServiceRole.Arn
        ArtifactStore:
          Type: S3
          Location: !Ref ArtifactsS3Location
        Name: !Sub ${AWS::StackName}-frugalbot-qa-Pipeline
        Stages:
          - Name: Source
            Actions:
              - Name: APISource
                ActionTypeId:
                  Category: Source
                  Owner: AWS
                  Version: 1
                  Provider: CodeCommit
                Configuration:
                  RepositoryName: frugalbot
                  BranchName: qa
                OutputArtifacts:
                  - Name: JavaSource
                RunOrder: 1
              - Name: AppJsSource
                ActionTypeId:
                  Category: Source
                  Owner: AWS
                  Version: 1
                  Provider: CodeCommit
                Configuration:
                  RepositoryName: frugalbot-js
                  BranchName: master
                OutputArtifacts:
                  - Name: JSSource
                RunOrder: 1
              - Name: DockerSource
                ActionTypeId:
                  Category: Source
                  Owner: AWS
                  Version: 1
                  Provider: CodeCommit
                Configuration:
                  RepositoryName: FrugalBotContainer
                  BranchName: master
                OutputArtifacts:
                  - Name: DockerSource
                RunOrder: 1
          - Name: Package
            Actions:
              - Name: APIPackage
                ActionTypeId:
                  Category: Build
                  Owner: AWS
                  Version: 1
                  Provider: CodeBuild
                Configuration:
                  ProjectName: !Ref JavaPackCodeBuildProject
                InputArtifacts:
                  - Name: JavaSource
                OutputArtifacts:
                  - Name: JavaPackage
                RunOrder: 1
              - Name: AppJsPackage
                ActionTypeId:
                  Category: Build
                  Owner: AWS
                  Version: 1
                  Provider: CodeBuild
                Configuration:
                  ProjectName: !Ref JsPackCodeBuildProject
                InputArtifacts:
                  - Name: JSSource
                OutputArtifacts:
                  - Name: JSPackage
                RunOrder: 1
          - Name: Artifacts
            Actions:
              - Name: ProcessBuildInfo
                ActionTypeId:
                  Category: Invoke
                  Owner: AWS
                  Version: 1
                  Provider: Lambda
                Configuration:
                  FunctionName: !Ref ProcessBuildInfoFunction
                  UserParameters: !Ref ArtifactsS3Location
                InputArtifacts:
                  - Name: JavaSource
                  - Name: JSSource
                  - Name: JavaPackage
                  - Name: JSPackage
                  - Name: DockerSource
                OutputArtifacts:
                  - Name: BuildInfo
                RunOrder: 1
          - Name: Build
            Actions:
              - Name: APIDockerContainer
                ActionTypeId:
                  Category: Build
                  Owner: AWS
                  Version: 1
                  Provider: CodeBuild
                Configuration:
                  ProjectName: !Ref ApiContainerCodeBuildProject
                InputArtifacts:
                  - Name: DockerSource
                RunOrder: 1
              - Name: WorkerDockerContainer
                ActionTypeId:
                  Category: Build
                  Owner: AWS
                  Version: 1
                  Provider: CodeBuild
                Configuration:
                  ProjectName: !Ref WorkerContainerCodeBuildProject
                InputArtifacts:
                  - Name: DockerSource
                RunOrder: 1
              - Name: JSDockerContainer
                ActionTypeId:
                  Category: Build
                  Owner: AWS
                  Version: 1
                  Provider: CodeBuild
                Configuration:
                  ProjectName: !Ref JsContainerCodeBuildProject
                InputArtifacts:
                  - Name: DockerSource
                RunOrder: 1
              - Name: CFTemplate
                ActionTypeId:
                  Category: Build
                  Owner: AWS
                  Version: 1
                  Provider: CodeBuild
                Configuration:
                  ProjectName: !Ref CFTCodeBuildProject
                InputArtifacts:
                  - Name: DockerSource
                OutputArtifacts:
                  - Name: Template
                RunOrder: 2
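CodePipeline’s ordering rules are easy to lose in a template this long: stages run one after another, actions in the same stage that share a RunOrder run in parallel, and an action’s input artifacts must come from an action in an earlier stage or with a lower RunOrder. The Python sketch below (our own helper functions, not an AWS API) encodes those rules over a trimmed copy of the pipeline above.

```python
from collections import defaultdict


def act(name, run_order=1, inputs=(), outputs=()):
    """Minimal action dict with the same keys as the CloudFormation snippet."""
    return {
        "Name": name,
        "RunOrder": run_order,
        "InputArtifacts": [{"Name": n} for n in inputs],
        "OutputArtifacts": [{"Name": n} for n in outputs],
    }


# Stage, action and artifact names copied from the Pipeline resource above;
# action types and configuration are left out.
PIPELINE = [
    {"Name": "Source", "Actions": [
        act("APISource", outputs=["JavaSource"]),
        act("AppJsSource", outputs=["JSSource"]),
        act("DockerSource", outputs=["DockerSource"]),
    ]},
    {"Name": "Package", "Actions": [
        act("APIPackage", inputs=["JavaSource"], outputs=["JavaPackage"]),
        act("AppJsPackage", inputs=["JSSource"], outputs=["JSPackage"]),
    ]},
    {"Name": "Artifacts", "Actions": [
        act("ProcessBuildInfo", outputs=["BuildInfo"],
            inputs=["JavaSource", "JSSource", "JavaPackage", "JSPackage", "DockerSource"]),
    ]},
    {"Name": "Build", "Actions": [
        act("APIDockerContainer", inputs=["DockerSource"]),
        act("WorkerDockerContainer", inputs=["DockerSource"]),
        act("JSDockerContainer", inputs=["DockerSource"]),
        act("CFTemplate", run_order=2, inputs=["DockerSource"], outputs=["Template"]),
    ]},
]


def waves(stage):
    """Group a stage's actions by RunOrder, lowest first; each group runs in parallel."""
    groups = defaultdict(list)
    for action in stage["Actions"]:
        groups[action.get("RunOrder", 1)].append(action)
    return [groups[order] for order in sorted(groups)]


def execution_plan(stages):
    """Stages run sequentially; return each stage's parallel groups of action names."""
    return [(s["Name"], [[a["Name"] for a in wave] for wave in waves(s)]) for s in stages]


def missing_inputs(stages):
    """Return (action, artifact) pairs whose input no earlier action has produced."""
    produced, missing = set(), []
    for stage in stages:
        for wave in waves(stage):
            for action in wave:
                missing += [(action["Name"], art["Name"])
                            for art in action.get("InputArtifacts", [])
                            if art["Name"] not in produced]
            # Outputs only become available to later waves and later stages.
            for action in wave:
                produced.update(art["Name"] for art in action.get("OutputArtifacts", []))
    return missing
```

Applied to the template above, the plan runs the three container builds in parallel and CFTemplate after them, and missing_inputs returns an empty list. Since CFTemplate only consumes DockerSource, its RunOrder of 2 is an ordering choice rather than an artifact dependency.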

CloudFormation – Continuous Delivery to Stack

If you have read some of our previous posts, you know we are big on “infrastructure as code”. It has significantly reduced the cost of repetitive operations work. All the automation we have built for our customers uses either CloudFormation or a third-party tool such as Puppet. We have several posts on cost optimization with Spot Instance and Spot Fleet CloudFormation stacks, where you can find practical examples.

A Unique Version for Each Deploy

Suppose we have already created a stack on AWS through CloudFormation. What is the best way to update it? We can upload a new CloudFormation template in the web console, run the AWS CLI, or call the API to update the stack. Either way, it all comes down to deploying a newer version of the CloudFormation template. We were stuck until we found this great example, which gave us some insight.

Essentially, we pass the commit hash to the CloudFormation stack as a parameter. If you deploy containers, you can tag each container image with the commit hash; if you deploy packages, you can label each package with it. The first 8 characters of the commit hash serve, in practice, as a unique tag for each deployment, and they make it easy to isolate troubleshooting to a specific version. Here is an ECS container CloudFormation example:

    Parameters:
      ...
      JsImageTag:
        Description: Please supply javascript container commit hash
        Type: String
      JavaImageTag:
        Description: Please supply api container commit hash
        Type: String
      ...
    Resources:
      ...
      apiTaskDefinition:
        Type: AWS::ECS::TaskDefinition
        DependsOn: escApiALB
        Properties:
          ContainerDefinitions:
            - Name: api
              Essential: true
              Environment:
                - Name: JavaImageTag
                  Value: !Ref JavaImageTag
              Image: !Sub some-container:api_${JavaImageTag}
              ...
            - Name: js
              ...
              Environment:
                - Name: JsImageTag
                  Value: !Ref JsImageTag
              Image: !Sub some-container:js_${JsImageTag}
              ...
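Deriving the tag is nearly a one-liner, but it helps to pin down exactly what the ECS template expects. A Python sketch, assuming the full 40-character SHA-1 commit hash is available; in CodeBuild, CODEBUILD_RESOLVED_SOURCE_VERSION holds the commit ID for CodeCommit sources. The function names are hypothetical, and some-container is the placeholder repository from the template.

```python
import os
import re

# A full Git SHA-1 commit hash is 40 hexadecimal characters.
_FULL_SHA = re.compile(r"[0-9a-f]{40}")


def short_tag(commit_hash: str, length: int = 8) -> str:
    """Return the first `length` characters of a full commit hash."""
    commit_hash = commit_hash.strip().lower()
    if not _FULL_SHA.fullmatch(commit_hash):
        raise ValueError(f"expected a 40-character commit hash, got {commit_hash!r}")
    return commit_hash[:length]


def image_reference(repository: str, prefix: str, commit_hash: str) -> str:
    """Build the same string as !Sub some-container:api_${JavaImageTag}."""
    return f"{repository}:{prefix}_{short_tag(commit_hash)}"


def tag_from_codebuild() -> str:
    """Short tag for the commit CodeBuild checked out.

    Assumes CODEBUILD_RESOLVED_SOURCE_VERSION holds the full commit ID,
    as it does for CodeCommit sources.
    """
    return short_tag(os.environ["CODEBUILD_RESOLVED_SOURCE_VERSION"])
```

The short tag is the value that would be passed as JavaImageTag or JsImageTag, and the image has to be pushed with the matching api_ or js_ tag for the task definition to find it.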

CD by Overriding Parameters

With the example above, each deployment only needs to update the parameters while reusing the same CloudFormation template. Updating a stack is simple because AWS provides extensive SDK and CLI support. The next step is to automate this in CodePipeline, which offers a few options for the deploy action. One is to use the built-in CloudFormation deploy action to override parameters. In our experience, this did not work well with output artifacts generated on S3 by earlier stages; it works if template.zip is a static object on S3.

    - Name: Deploy
      ActionTypeId:
        Category: Deploy
        Owner: AWS
        Version: 1
        Provider: CloudFormation
      Configuration:
        ChangeSetName: Deploy
        ActionMode: CREATE_UPDATE
        ...
        TemplatePath: Template::cloudFormation/template.yml
        ParameterOverrides: !Sub |
          {
            "JsImageTag" : { "Fn::GetParam" : [ "BuildInfo", "build.json", "js.hash" ] },
            "JavaImageTag" : { "Fn::GetParam" : [ "BuildInfo", "build.json", "java.hash" ] },
            "DesiredCapacity": "2"
          }
      InputArtifacts:
        - Name: Template
        - Name: BuildInfo
      RunOrder: 1
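The same parameter override can also be done from a script with the SDK, reusing the stack’s current template. Here is a minimal sketch with boto3’s update_stack, assuming build.json holds flat "js.hash" and "java.hash" keys, as we read the Fn::GetParam lookups above. redeploy and stack_parameters are our own hypothetical helpers, and the client is passed in so the sketch runs without AWS credentials.

```python
import json


def stack_parameters(build_info: dict, overrides: dict, keep_previous=()) -> list:
    """Build the Parameters list for CloudFormation UpdateStack.

    build_info is the parsed build.json, assumed to contain flat
    "js.hash" and "java.hash" keys.
    """
    params = [
        {"ParameterKey": "JsImageTag", "ParameterValue": build_info["js.hash"]},
        {"ParameterKey": "JavaImageTag", "ParameterValue": build_info["java.hash"]},
    ]
    params += [{"ParameterKey": k, "ParameterValue": str(v)} for k, v in overrides.items()]
    # Parameters left out of an update do not keep their current values
    # automatically, so carry unchanged ones over explicitly.
    params += [{"ParameterKey": k, "UsePreviousValue": True} for k in keep_previous]
    return params


def redeploy(cfn, stack_name: str, build_json: str, overrides=None,
             keep_previous=(), capabilities=()):
    """Update `stack_name` in place, reusing the template it already has.

    `cfn` is a CloudFormation client such as boto3.client("cloudformation").
    """
    return cfn.update_stack(
        StackName=stack_name,
        UsePreviousTemplate=True,
        Parameters=stack_parameters(json.loads(build_json), overrides or {}, keep_previous),
        Capabilities=list(capabilities),
    )
```

In a real run, cfn would be boto3.client("cloudformation"), keep_previous would list any other stack parameters that should keep their current values, and capabilities=["CAPABILITY_IAM"] would be needed if the template creates IAM resources. update_stack raises a ValidationError when there are no changes to apply, which a deploy script may want to treat as success.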

Because our CloudFormation YAML is generated dynamically, we use a Lambda function as a custom deploy action instead. We will go into the custom Lambda functions in more detail in Part 2. For now, assume that an “UpdateStackFunction” Lambda action has been built; it takes the BuildInfo artifact, which holds the commit hash of every repository, and the Template artifact, which holds the CloudFormation YAML files.

    - Name: Deploy
      Actions:
        - Name: DeployCFT
          ActionTypeId:
            Category: Invoke
            Owner: AWS
            Version: 1
            Provider: Lambda
          Configuration:
            FunctionName: !Ref UpdateStackFunction
            UserParameters: !Sub
              - "{\"StackName\":\"${stack}\",\"exec-role\": \"${role}\",\"cftTemplate\":\"template.yml\"}"
              - { role: !GetAtt CloudFormationExecutionRole.Arn, stack: 'stack-name' }
          InputArtifacts:
            - Name: BuildInfo
            - Name: Template
          RunOrder: 1
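Until Part 2, here is a minimal Python sketch of the plumbing any such Lambda action needs, assuming a Python runtime. It reads the UserParameters JSON configured above, locates the BuildInfo and Template artifacts in S3, and reports the outcome back to CodePipeline with put_job_success_result or put_job_failure_result. The deploy callback is hypothetical: it stands in for downloading the zipped artifacts (using the temporary credentials in the event’s artifactCredentials) and updating the stack. This is not our Part 2 implementation.

```python
import json


def parse_job(event: dict):
    """Pull the job ID, UserParameters and input artifact locations out of
    the event CodePipeline sends to a Lambda invoke action."""
    job = event["CodePipeline.job"]
    data = job["data"]
    user_params = json.loads(data["actionConfiguration"]["configuration"]["UserParameters"])
    # Each input artifact is a zip file in the pipeline's S3 artifact store.
    artifacts = {
        artifact["name"]: (artifact["location"]["s3Location"]["bucketName"],
                           artifact["location"]["s3Location"]["objectKey"])
        for artifact in data["inputArtifacts"]
    }
    return job["id"], user_params, artifacts


def make_handler(codepipeline, deploy):
    """Build a Lambda handler around a hypothetical deploy(params, artifacts).

    `codepipeline` is a client such as boto3.client("codepipeline").
    """
    def handler(event, context):
        job_id = event["CodePipeline.job"]["id"]
        try:
            _, params, artifacts = parse_job(event)
            deploy(params, artifacts)
        except Exception as exc:
            # Without a result call, the pipeline action waits until it times out.
            codepipeline.put_job_failure_result(
                jobId=job_id,
                failureDetails={"type": "JobFailed", "message": str(exc)[:500]},
            )
            return
        codepipeline.put_job_success_result(jobId=job_id)

    return handler
```

In the deployed function, the module-level handler would be make_handler(boto3.client("codepipeline"), deploy), and params["StackName"], params["exec-role"] and params["cftTemplate"] carry the values set in UserParameters.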

So far, we have covered the major stages of CI and CD with AWS CodePipeline: source, testing, packaging, and deployment. We have found this setup extremely helpful for saving cost and documenting infrastructure. We hope you find these examples and CloudFormation snippets helpful. Please feel free to send us your feedback or questions.

Ryo Hang

Solution Architect @ASCENDING