Kubernetes (k8s) is gaining immense popularity in the tech community, with more people now running extensive data workloads on the platform. However, the question arises: Is it realistically achievable to run Spark on Kubernetes? While many clients have successfully managed to do so, they encountered various challenges, particularly related to cost, performance, and scalability.

Today, we are excited to share our experience in enabling one of our client's teams to effectively debug and troubleshoot their complex infrastructure while running Spark on Kubernetes. Despite the obstacles faced, our expertise and tailored solutions helped overcome these issues and achieve a well-functioning setup for their data workloads.

The Challenge:

Running Apache Spark workloads on Amazon EKS (Amazon Elastic Kubernetes Service) indeed offers numerous benefits, but it can come with certain challenges, particularly in terms of transparency into the ETL (Extract, Transform, Load) process. As the Spark jobs grow larger and more complex, it becomes increasingly difficult to pinpoint the exact line of code that went wrong or to understand the runtime behavior of the entire process.

For an engineer, runtime visibility is essential for effective debugging and troubleshooting. Without clear insight into code execution and job performance, it is hard to identify code smells or bottlenecks that might be hurting efficiency.

To address this issue and ensure code efficiency, one of the strategies is to set up interactive Spark environments, which empower Spark engineers to experiment with data processing logic and instantly observe results. This feature facilitates efficient iterative development and streamlined debugging, enabling quick identification and resolution of issues. Moreover, it provides a sandbox for performance tuning, optimizing Spark jobs before deployment, and fosters seamless collaboration and knowledge sharing within engineering teams.

Overview - Spark on Kubernetes

When running Spark on Kubernetes, you unlock the potential for efficient resource allocation and scalability, ensuring optimal resource utilization in response to varying workloads. The support for containerization and multi-tenancy enhances isolation and portability, allowing Spark applications to maintain consistent performance across diverse infrastructure environments.

spark-submit can be directly used to submit a Spark application to a Kubernetes cluster. The submission mechanism works as follows:

- Spark creates a Spark driver running within a Kubernetes pod.
- The driver creates executors, which also run within Kubernetes pods, connects to them, and executes application code.
- When the application completes, the executor pods terminate and are cleaned up, but the driver pod persists logs and remains in “completed” state in the Kubernetes API until it’s eventually garbage collected or manually cleaned up.
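As a rough illustration of the submission flow above, the sketch below assembles a `spark-submit` command line for a Kubernetes master. The function name `build_spark_submit`, the API server address, the image name, and the application path are all hypothetical placeholders; the flags themselves (`--master`, `--deploy-mode`, `--name`, `--conf`) are standard `spark-submit` options.

```python
from typing import Dict, List


def build_spark_submit(
    api_server: str,
    image: str,
    app_path: str,
    app_name: str = "example-app",
    deploy_mode: str = "cluster",
    executors: int = 2,
) -> List[str]:
    """Assemble a spark-submit argument list for a Kubernetes master."""
    conf: Dict[str, str] = {
        "spark.kubernetes.container.image": image,
        "spark.executor.instances": str(executors),
    }
    cmd = [
        "spark-submit",
        "--master", f"k8s://{api_server}",
        "--deploy-mode", deploy_mode,
        "--name", app_name,
    ]
    # Emit each setting as a separate --conf key=value pair.
    for key, value in sorted(conf.items()):
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(app_path)
    return cmd


if __name__ == "__main__":
    # Placeholder values; substitute your own cluster endpoint and image.
    print(" ".join(build_spark_submit(
        api_server="https://example-eks-endpoint:443",
        image="pyspark:latest",
        app_path="local:///opt/spark/app/job.py",
    )))
```

Returning a list rather than a single string makes it straightforward to pass the command to `subprocess.run` without shell quoting issues.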

Cluster Mode:

In cluster mode, the Spark driver and executor processes run in separate pods within the Amazon EKS cluster. The driver, responsible for managing the Spark application and orchestrating tasks, runs in its own pod, while the executors, responsible for executing the actual data processing tasks, run in separate pods. Cluster mode is typically used to run production jobs.

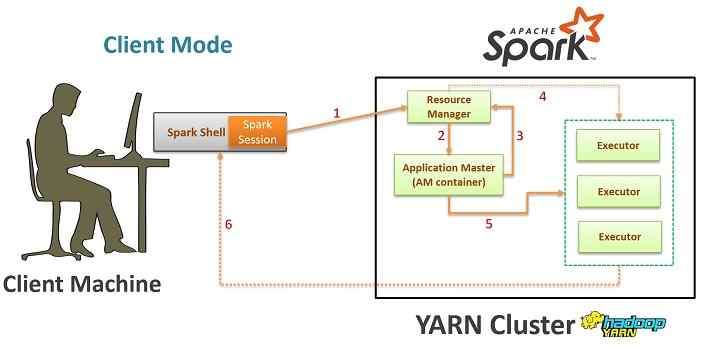

Client Mode:

In client mode, the Spark driver runs in the process that submits the Spark application, typically on a client machine outside of the Amazon EKS cluster. In this mode, the driver communicates with the Kubernetes API server to request the execution of Spark executors on the Kubernetes cluster nodes. Client mode is more commonly used for development and debugging.

About ASCENDING

At ASCENDING, we're all about providing cloud-based solutions that fit your unique needs, because we understand that each client has a different level of technical maturity. As proud partners of AWS, Snowflake, and Microsoft Azure, we've got the latest and greatest technology to boost your speed and efficiency.

ASCENDING Approach:

We have collaborated with numerous customers on their Spark workloads. As the Spark workload expanded, our clients recognized the significance of using the appropriate tools. This not only helped them save on infrastructure costs but also significantly improved the work velocity for their engineers.

Without an interactive Spark console, engineers spent hours waiting for code deployments. In this scenario, Jupyter Notebook proves to be a valuable tool. There are also alternative approaches that we will discuss in separate blog posts: troubleshooting code using an IDE (such as VS Code) or engaging in collaborative coding through JupyterPod + CodeServer.

To achieve interactive Spark on Amazon EKS, we use JupyterHub on Kubernetes and start the Spark session in client mode inside the Kubernetes cluster. This approach creates the Spark driver on the Jupyter Notebook pod inside the Amazon EKS cluster, which avoids the extra security rules that executor pods would need to communicate with a driver outside the cluster.

JupyterHub is a multi-user Jupyter Notebook server that can use Kubernetes as the underlying container orchestration platform. JupyterHub allows users to create and manage their own Jupyter Notebooks in a shared and collaborative environment. You can choose different container images for your Jupyter Notebook pod to get different development environments; for example, jupyter/pyspark-notebook includes Python support for Apache Spark. Inside the notebook, you can spin up a Spark session in client mode.

Deploy JupyterHub

Use Helm to deploy JupyterHub in the Amazon EKS cluster:

    helm upgrade --cleanup-on-fail \
      --install jupyterhub jupyterhub/jupyterhub \
      --namespace jupyterhub \
      --version=2.0.0 \
      --values values.yaml

We can edit values.yaml to use a specific image for the Jupyter Notebook pod:

    singleuser:
      image:
        name: jupyter/pyspark-notebook
        tag: "latest"

Deploy headless service for Spark driver

To make sure the Spark driver can communicate with the Spark executors, we deploy a headless service to expose the Spark driver.

    # Headless Service
    apiVersion: v1
    kind: Service
    metadata:
      name: spark-driver
      namespace: jupyterhub
      labels:
        spark-app-selector: spark-headless-svc
    spec:
      clusterIP: None
      selector:
        hub.jupyter.org/username:
        component: singleuser-server
      ports:
        - name: spark-driver-ui-port
          port: 4040
          targetPort: 4040
        - name: blockmanager
          port: 7777
          targetPort: 7777
        - name: driver
          port: 2222
          targetPort: 2222
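Because the Service is headless (`clusterIP: None`), its cluster DNS name resolves directly to the IP of the selected notebook pod, which is how executors reach the driver. A minimal sketch of deriving that name, assuming the default `cluster.local` cluster domain; the helper name `service_fqdn` is hypothetical:

```python
def service_fqdn(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Return the in-cluster DNS name of a Kubernetes Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


# Service name and namespace taken from the manifest in this section.
driver_host = service_fqdn("spark-driver", "jupyterhub")
print(driver_host)  # spark-driver.jupyterhub.svc.cluster.local
```

This is the value the Spark session later needs for `spark.driver.host`.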

Deploy service account for Spark

For the Spark driver to control executor pods, it needs Kubernetes permissions to create, list, and delete pods. We use a service account with a Kubernetes Role and RoleBinding for authorization.
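To make the authorization model concrete, here is a small sketch of how a Role's rules can be read: a request is allowed when some rule lists both its resource and its verb, with `*` acting as a wildcard. This is a simplified illustration (it ignores `apiGroups` and `resourceNames`), not the Kubernetes authorizer itself, and `role_allows` is a hypothetical helper. The sample rules compare a wildcard rule with a narrower one limited to the create, list, and delete verbs mentioned above; whether such a narrow set is sufficient for Spark in practice is not something this sketch establishes.

```python
from typing import Dict, List

Rule = Dict[str, List[str]]


def role_allows(rules: List[Rule], resource: str, verb: str) -> bool:
    """Return True if any rule grants `verb` on `resource` ("*" matches anything)."""
    for rule in rules:
        resources = rule.get("resources", [])
        verbs = rule.get("verbs", [])
        resource_ok = "*" in resources or resource in resources
        verb_ok = "*" in verbs or verb in verbs
        if resource_ok and verb_ok:
            return True
    return False


# Illustrative rule sets: one with a wildcard verb, one with explicit verbs.
wildcard_rules = [{"apiGroups": [""], "resources": ["pods"], "verbs": ["*"]}]
narrow_rules = [{"apiGroups": [""], "resources": ["pods"],
                 "verbs": ["create", "list", "delete"]}]

print(role_allows(wildcard_rules, "pods", "watch"))     # True
print(role_allows(narrow_rules, "pods", "watch"))       # False
print(role_allows(narrow_rules, "services", "create"))  # False
```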

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: spark
      namespace: jupyterhub
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: spark
      namespace: jupyterhub
    subjects:
      - kind: ServiceAccount
        name: spark
        namespace: jupyterhub
    roleRef:
      kind: Role
      name: spark-role
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: spark-role
      namespace: jupyterhub
    rules:
      - apiGroups: [""]
        resources: [pods]
        verbs: ["*"]
      - apiGroups: [""]
        resources: [services]
        verbs: ["*"]
      - apiGroups: [""]
        resources: [configmaps]
        verbs: ["*"]
      - apiGroups: [""]
        resources: [persistentvolumeclaims]
        verbs: ["*"]

Spin up Spark session

    from pyspark import SparkConf

    conf = (
        SparkConf()
        .setMaster("k8s://xxxxxxxxxx:443")  # Your EKS address name
        .set("spark.kubernetes.container.image", "pyspark:latest")  # Spark image name
        .set("spark.driver.port", "2222")  # Needs to match svc
        .set("spark.driver.blockManager.port", "7777")  # Needs to match svc
        .set("spark.driver.host", "spark-driver.jupyterhub.svc.cluster.local")  # Needs to match svc
        .set("spark.driver.bindAddress", "0.0.0.0")  # Listen on all interfaces of the notebook pod
        .set("spark.kubernetes.namespace", "jupyterhub")
        .set("spark.kubernetes.authenticate.driver.serviceAccountName", "spark")
        .set("spark.kubernetes.authenticate.serviceAccountName", "spark")
        .set("spark.executor.instances", "2")
        .set("spark.kubernetes.container.image.pullPolicy", "IfNotPresent")
        .set("spark.app.name", "name")
    )
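The settings marked "Needs to match svc" are easy to get out of sync with the headless Service. The sketch below is one hedged way to check them before starting a session; `check_driver_conf` and the plain-dict inputs are hypothetical stand-ins for the real `SparkConf` and Service manifest.

```python
from typing import Dict, List


def check_driver_conf(settings: Dict[str, str], service_host: str,
                      service_ports: Dict[str, int]) -> List[str]:
    """Return a list of mismatches between Spark driver settings and the Service."""
    problems: List[str] = []
    if settings.get("spark.driver.host") != service_host:
        problems.append("spark.driver.host does not match the Service DNS name")
    # Map each Spark setting to the Service port name it must agree with.
    expected = {
        "spark.driver.port": "driver",
        "spark.driver.blockManager.port": "blockmanager",
    }
    for key, port_name in expected.items():
        if settings.get(key) != str(service_ports.get(port_name)):
            problems.append(f"{key} does not match Service port '{port_name}'")
    return problems


# Values copied from the Service manifest and Spark configuration in this post.
driver_settings = {
    "spark.driver.host": "spark-driver.jupyterhub.svc.cluster.local",
    "spark.driver.port": "2222",
    "spark.driver.blockManager.port": "7777",
}
service_ports = {"driver": 2222, "blockmanager": 7777, "spark-driver-ui-port": 4040}

print(check_driver_conf(driver_settings,
                        "spark-driver.jupyterhub.svc.cluster.local",
                        service_ports))  # []
```

With a real `SparkConf`, the same values can be read with `conf.get(key)` and passed to a check like this one.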

Celeste Shao

Data Engineer @ASCENDING

Daoqi

DevOps Engineer @ASCENDING